Scientific Publications

This page lists articles in peer-reviewed scientific journals, conference papers, and preprints. [BibTeX]

| Journals | Conferences | Preprints |
| --- | --- | --- |
| ~38 | ~17 | ~7 |

Journals

- An Imprecise SHAP as a Tool for Explaining the Class Probability Distributions under Limited Training Data (2025) [DOI] [ArXiV]

- SurvBeNIM: The Beran-Based Neural Importance Model for Explaining the Survival Models (2025) [DOI] [ArXiV]

- Dual feature-based and example-based explanation methods (2025) [DOI] [ArXiV]

- Ada-naf: semi-supervised anomaly detection based on the neural attention forest (2025) [DOI]

- Survival concept-based learning models (2025) [DOI]

- Ensemble-Based Survival Models with the Self-Attended Beran Estimator Predictions (2025) [DOI]

- Survival Analysis as Imprecise Classification with Trainable Kernels (2025) [DOI] [GitHub] [ArXiV]

-

Predictive models and dynamics of estimates of applied tasks characteristics using machine learning methods (2024) [DOI]

-

Interpretation methods for machine learning models in the framework of survival analysis with censored data: a brief overview (2024) [DOI]

-

BENK: The Beran Estimator with Neural Kernels for Estimating the Heterogeneous Treatment Effect (2024) [DOI] [ArXiV]

-

SurvBeX: an explanation method of the machine learning survival models based on the Beran estimator (2024) [DOI] [ArXiV]

-

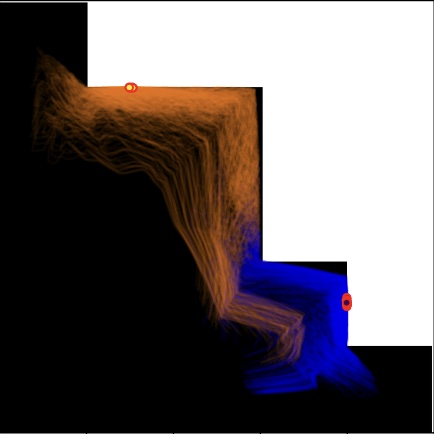

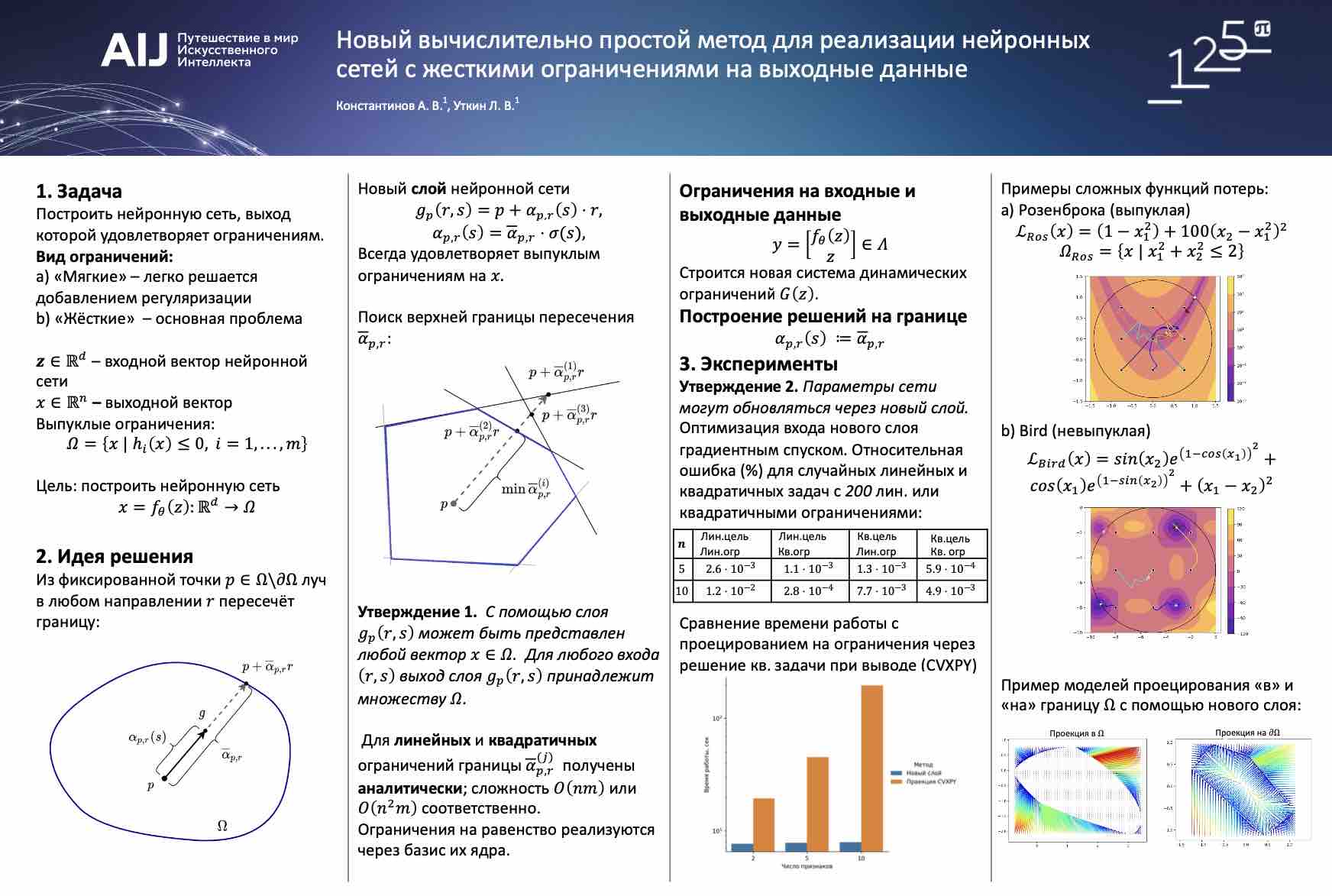

Imposing Star-Shaped Hard Constraints on the Neural Network Output (2024) [DOI] [GitHub]

-

LARF: Two-Level Attention-Based Random Forests with a Mixture of Contamination Models (2023) [DOI]

-

Attention-like feature explanation for tabular data (2023) [DOI] [GitHub]

-

Heterogeneous Treatment Effect with Trained Kernels of the Nadaraya–Watson Regression (2023) [DOI]

-

Attention and self-attention in random forests (2023) [DOI] [GitHub] [ArXiV]

-

Multiple Instance Learning with Trainable Soft Decision Tree Ensembles (2023) [DOI]

-

Attention-Based Random Forests and the Imprecise Pari-Mutual Model (2023) [DOI]

-

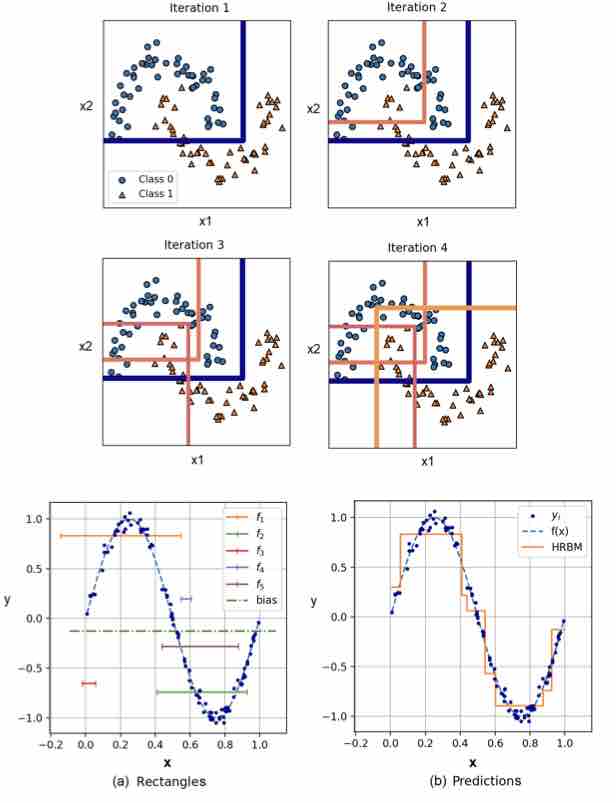

Interpretable ensembles of hyper-rectangles as base models (2023) [DOI] [GitHub] [ArXiV]

-

Flexible deep forest classifier with multi-head attention (2023) [DOI] [GitHub]

-

A new computationally simple approach for implementing neural networks with output hard constraints (2023) [DOI] [GitHub] [ArXiV]

-

Random Survival Forests Incorporated By The Nadaraya-Watson Regression (2022) [DOI]

-

Improved Anomaly Detection by Using the Attention-Based Isolation Forest (2022) [DOI]

-

An Extension of the Neural Additive Model for Uncertainty Explanation of Machine Learning Survival Models (2022) [DOI]

-

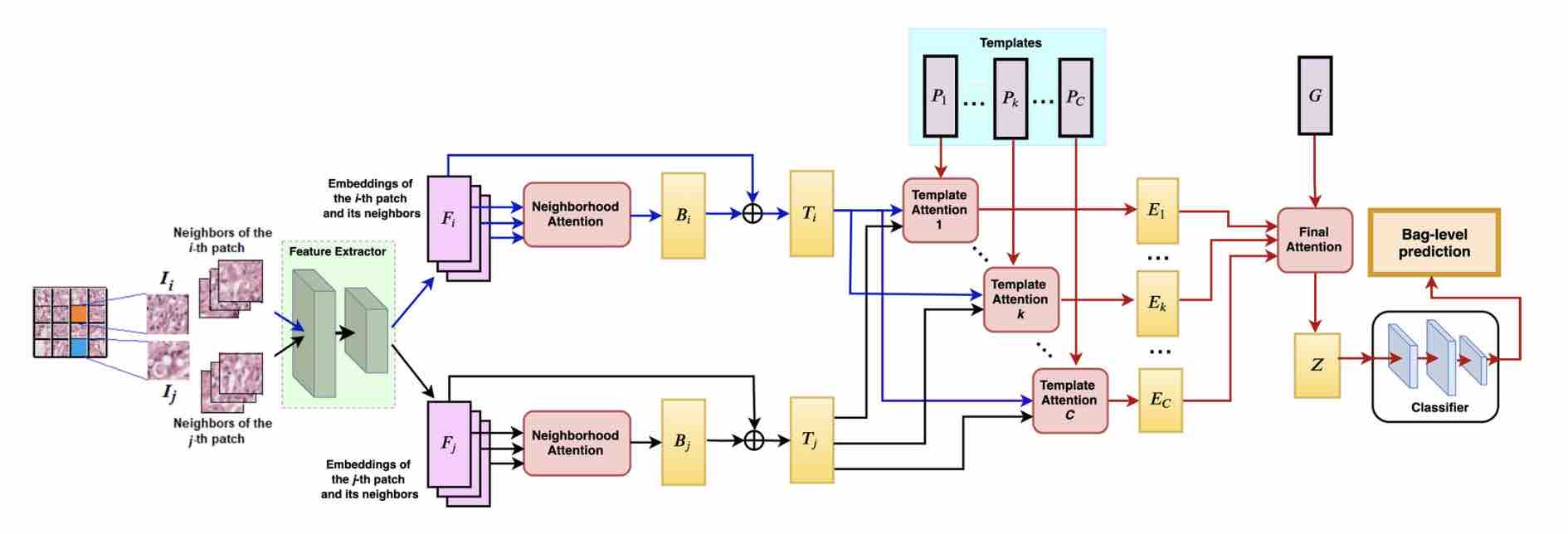

Multi-attention multiple instance learning (2022) [DOI] [GitHub] [ArXiV]

-

SurvNAM: The machine learning survival model explanation (2022) [DOI]

-

Ensembles of random SHAPs (2022) [DOI]

-

Attention-based random forest and contamination model (2022) [DOI]

-

Uncertainty Interpretation of the Machine Learning Survival Model Predictions (2021) [DOI]

-

Deep Gradient Boosting For Regression Problems (2021) [DOI]

-

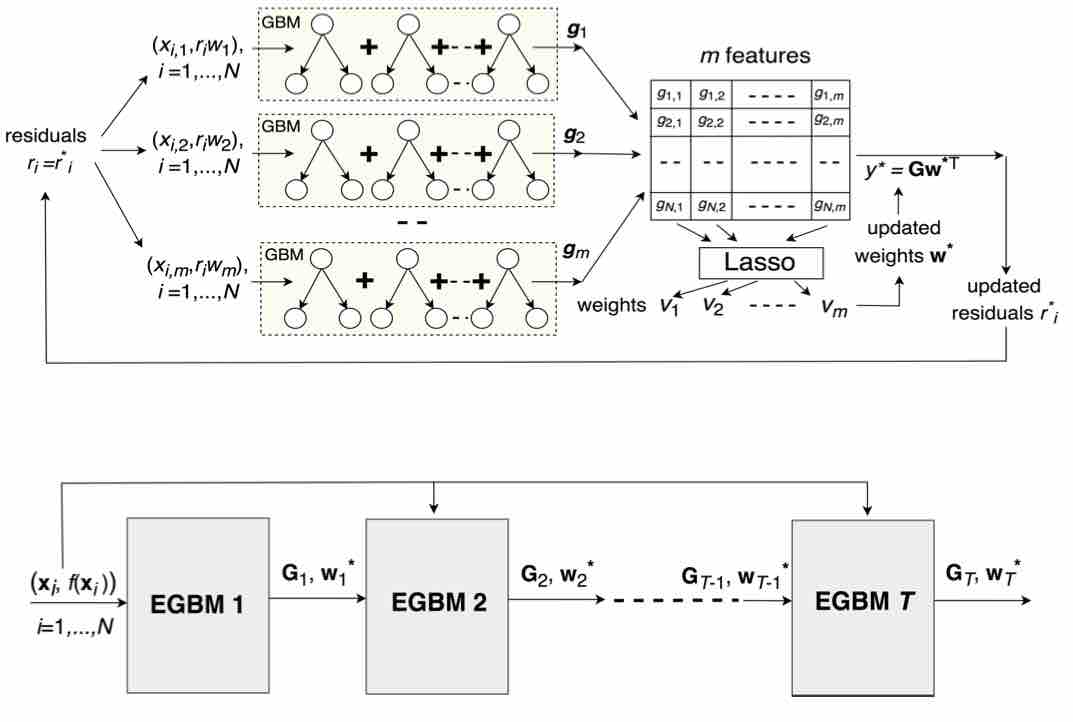

A Generalized Stacking for Implementing Ensembles of Gradient Boosting Machines (2021) [DOI]

-

Counterfactual explanation of machine learning survival models (2021) [DOI]

-

Interpretable machine learning with an ensemble of gradient boosting machines (2021) [DOI] [GitHub] [ArXiV]

- An Adaptive Weighted Deep Survival Forest (2020) [DOI]

- Estimation of Personalized Heterogeneous Treatment Effects Using Concatenation and Augmentation of Feature Vectors (2020) [DOI]

- A new adaptive weighted deep forest and its modifications (2020) [DOI]

- Deep Forest as a framework for a new class of machine-learning models (2019) [DOI]

Conferences

-

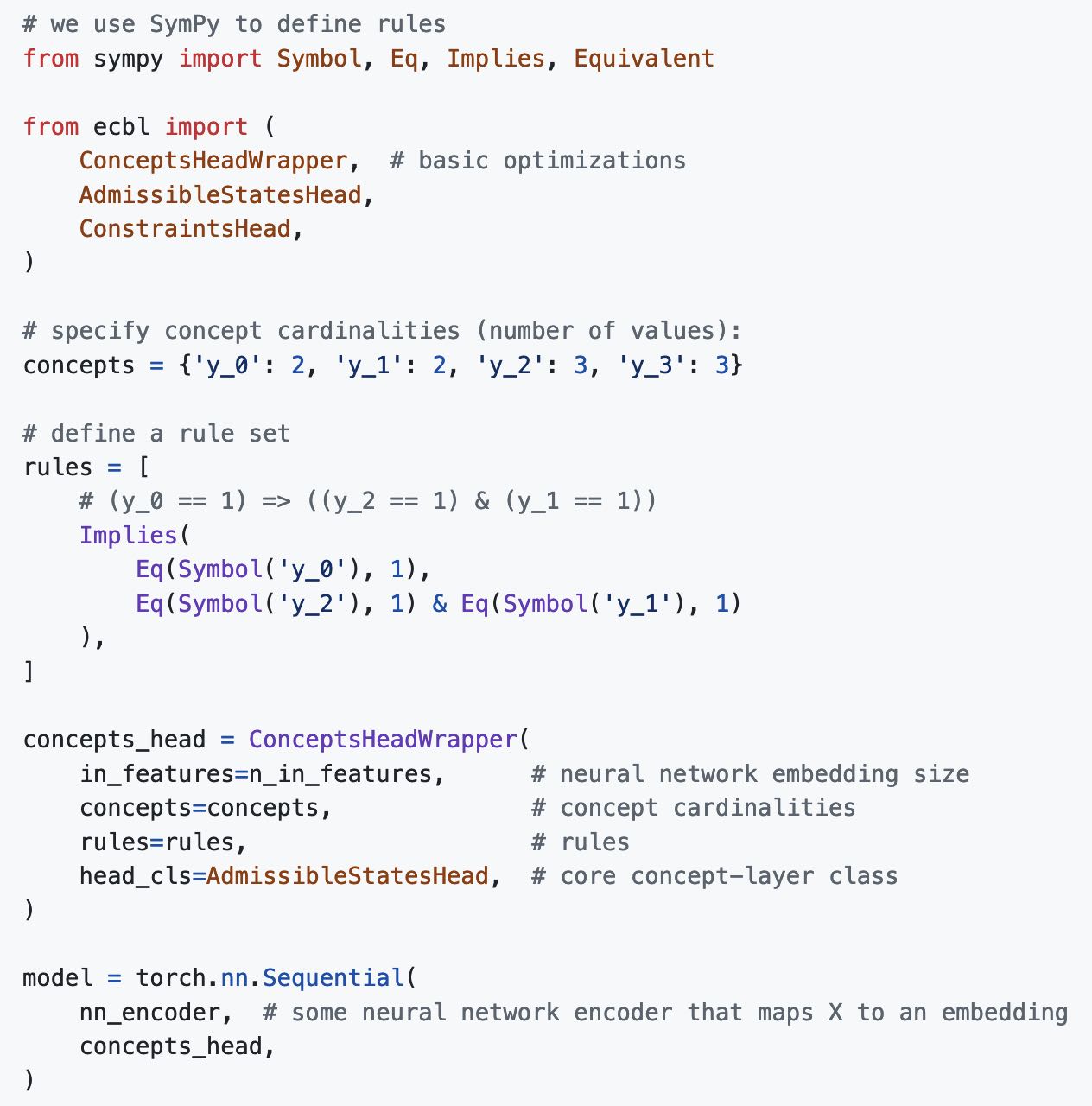

An Explicit Concept-Based Approach for Incorporating Expert Rules into Machine Learning Models (2024) [DOI] [GitHub]

-

Robust Models of Distance Metric Learning by Interval-Valued Training Data (2023) [DOI]

-

Modifications of SHAP for Local Explanation of Function-Valued Predictions Using the Divergence Measures (2023) [DOI]

-

GBMILs: Gradient Boosting Models for Multiple Instance Learning (2023) [DOI]

-

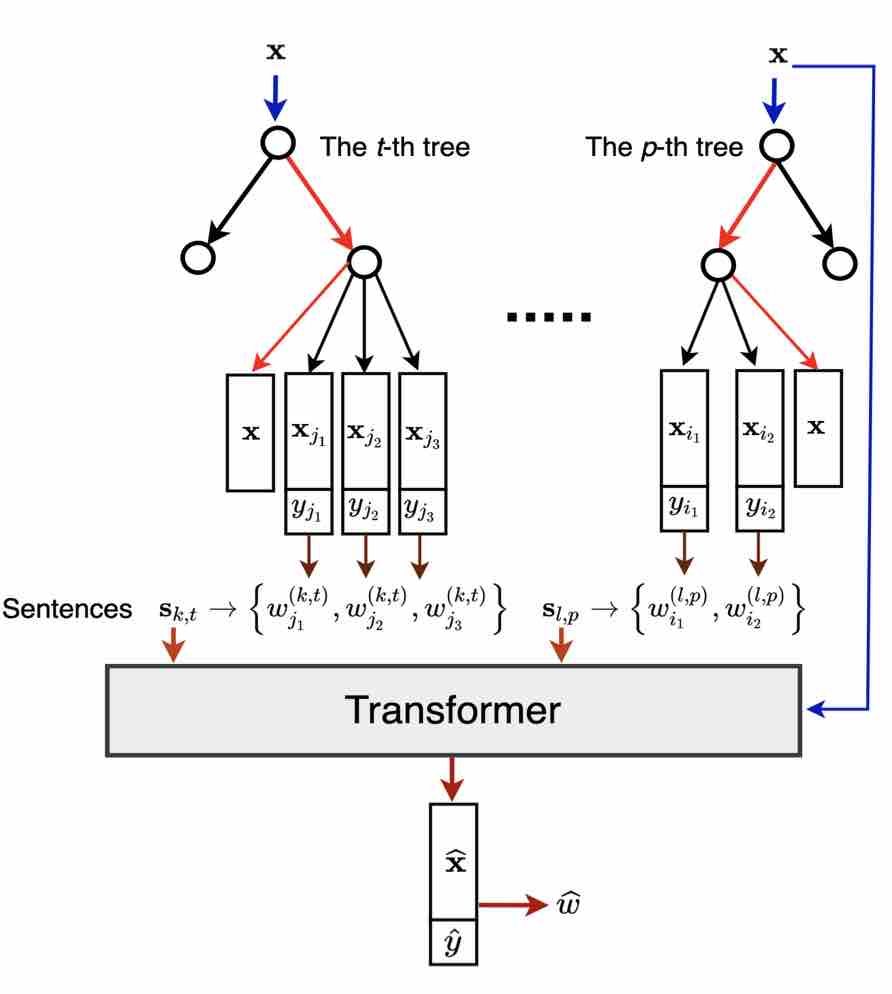

Neural Attention Forests: Transformer-Based Forest Improvement (2023) [DOI] [GitHub] [ArXiV]

-

Random Forests with Attentive Nodes (2022) [DOI]

-

Multiple Instance Learning through Explanation by Using a Histopathology Example (2022) [DOI]

-

An Approach for the Robust Machine Learning Explanation Based on Imprecise Statistical Models (2022) [DOI]

-

AGBoost: Attention-based modification of gradient boosting machine (2022) [DOI]

- The Deep Survival Forest and Elastic-Net-Cox Cascade Models as Extensions of the Deep Forest (2021) [DOI]

- Combining an autoencoder and a variational autoencoder for explaining the machine learning model predictions (2021) [DOI]

- Semi-supervised Learning for Medical Image Segmentation (2021) [DOI]

- Gradient Boosting Machine with Partially Randomized Decision Trees (2021) [DOI]

- Adaptive Weighted Deep Survival Forest (2020, in Russian)

- A Deep Forest Improvement by Using Weighted Schemes (2019) [DOI]

- Segmentation of Three-Dimensional Medical Images Based on Classification Algorithms (2018, in Russian)

- Level Set and Fast Marching Algorithms for Semi-Automatic Segmentation of Medical Images (2017, in Russian)

Preprints

- Generating Survival Interpretable Trajectories and Data (2024) [GitHub] [ArXiV]

- FI-CBL: A Probabilistic Method for Concept-Based Learning with Expert Rules (2024) [GitHub] [ArXiV]

- Incorporating Expert Rules into Neural Networks in the Framework of Concept-Based Learning (2024) [GitHub] [ArXiV]

- Multiple Instance Learning with Trainable Decision Tree Ensembles (2023) [ArXiV]

- Interpretable Ensembles of Hyper-Rectangles as Base Models (2023) [ArXiV]

- A New Computationally Simple Approach for Implementing Neural Networks with Output Hard Constraints (2023) [GitHub] [ArXiV]

- An adaptive weighted deep forest classifier (2019) [ArXiV]